Today I was playing around with vSphere with Tanzu. I want to consume vSphere with Tanzu and...

vConsultants blog

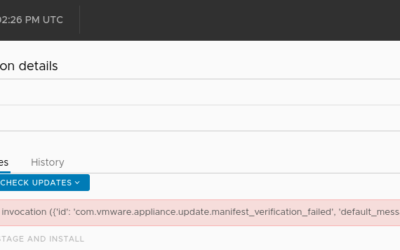

VCSA 7 U1 available updates error

by Harold Preyers | 2021, Mar 12 | vCenter, vSphere

Today I deployed a new VCSA 7 U1 and as U2 has GA'd recently I wanted to update the environment...

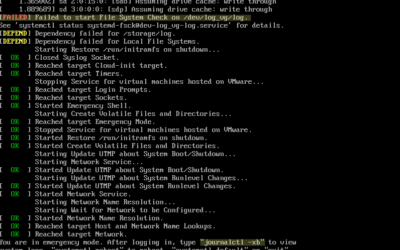

VCSA does not boot due to file system errors

by Harold Preyers | 2020, Dec 7 | vCenter

Where do I start troubleshooting? journalctl -xb, File System Check, Other volumes, Reboot, Remark. Due to a...

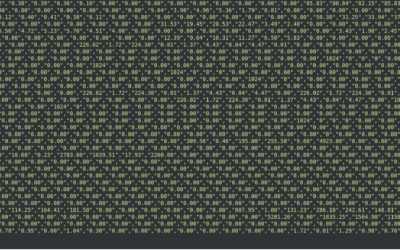

esxtop output is not displaying as it should

by Harold Preyers | 2020, Dec 4 | ESXi

When you connect to your ESXi host and launch esxtop, you look at the esxtop output and it is...

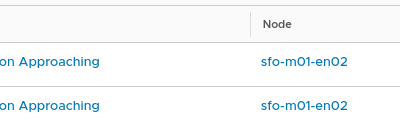

NSX-T password expiration alarms in the Home Lab

by Harold Preyers | 2020, Nov 20 | NSX

The challenge: I have a couple of NSX-T environments in my home lab. I logged on to one of them and...

Upgrade Methodology – Upgrade the homelab

by Harold Preyers | 2020, Oct 27 | vSphere

This post is not an end-to-end upgrade guide but a methodology guide. Everything is more or less...

Use iPerf to test NIC speed between two ESXi hosts

by Harold Preyers | 2020, Oct 26 | ESXi

Sometimes you want or need to use iPerf to test the NIC speed between two ESXi hosts. I did because I...

My first deploy with VMware Cloud Foundation

by Harold Preyers | 2019, Dec 30 | VMware Cloud Foundation

I have been working on a script to deploy environments on a regular basis in my homelab. While I...

Troubleshooting Cross vCenter vMotion Utility

by Harold Preyers | 2019, Oct 31 | Cross vCenter vMotion Utility

Struggling with errors while using the Cross vCenter vMotion Utility? This guide walks through...

Why You Should Attend VMUGBE 2019 – A VMware Community Event Worth Your Time

by Harold Preyers | 2019, Jun 4 | VMUG

Are you passionate about VMware technologies and eager to connect with like-minded professionals...