If you've ever enabled Workload Management in vSphere with Tanzu and later removed the Supervisor...

vConsultants blog

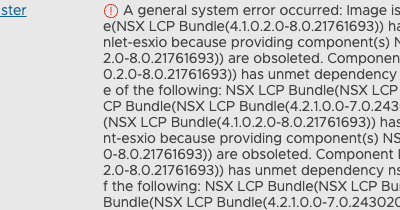

NSX Host Transport Nodes upgrade fails

by Harold Preyers | 2024, Oct 15 | NSX

While going through the latest lab upgrade round, I found myself running into an error when...

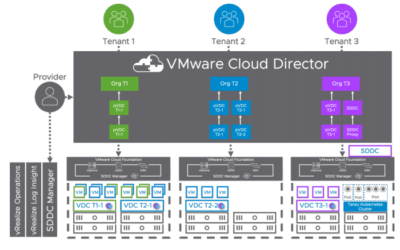

Quick Tip: VCD cell-management-tool without using credentials

by Harold Preyers | 2023, Jul 26 | VMware Cloud Director

Are you tired of constantly typing the password when executing cell-management-tool commands on...

Quick Update: NSX ALB documentation

by Harold Preyers | 2023, Jul 26 | Avi Load Balancer

To be honest, I have been complaining somewhat over the last year or so about the NSX Advanced...

Quick Tip: Setting up True SSO?

by Harold Preyers | 2023, Mar 2 | Omnissa Horizon

Are you setting up True SSO? Are you looking to use signed certificates to secure the communication...

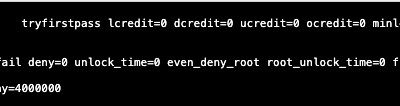

Photon OS 3.0 update the maximum failed OS login attempts

by Harold Preyers | 2023, Feb 28 | Photon OS

A client of mine was looking into how to update the maximum failed OS login attempts because they...

Deleting the datastore where a content library is hosted is probably not the best idea

by Harold Preyers | 2022, Apr 14 | Content Library

Deleting the datastore where a content library is hosted is probably not the best idea but ... yes...

Enable Workload Management does not finish

by Harold Preyers | 2022, Mar 14 | vSphere With Tanzu

Some time ago we were having issues in the Tanzu PoC class for partners we were teaching. One of...

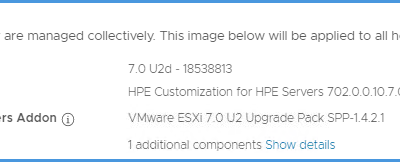

vLCM fails to upgrade a firmware component

by Harold Preyers | 2021, Dec 9 | vSphere

I recently experienced an issue in an HPE environment where vSphere Lifecycle Management (vLCM)...

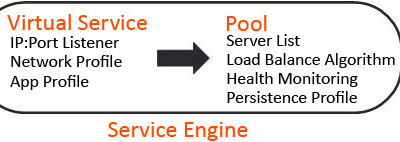

How to request Let’s Encrypt certificates on the NSX Advanced Load Balancer

by Harold Preyers | 2021, Jun 10 | Avi Load Balancer

Lately, I have been doing quite a bit of work on VMware vSphere with Tanzu. A prerequisite to...